YOLOv5实时目标检测

基于rknn_model_zoo/examples/yolov5/cpp进行改造,采用gstreamer采集usb摄像头数据,并通过yolov5模型进行实时目标检测。最后通过mediamtx将检测结果推流到rtmp服务器。

环境准备

1、板端rtsp推流 根据rtsp章节,配置gstreamer、mediamtx的运行环境。

2、板端准备好要修改的rknn_model_zoo代码

git clone https://github.com/airockchip/rknn_model_zoo.git --depth 1

3、yolov5模型 根据RKNN上手指南,将yolov5模型转换为rknn模型,并将模型拷贝到板端。

代码修改

可以通过交叉编译rknn_model_zoo的yolov5示例代码,再拷贝到板端进行测试。如果板端CPU性能足够,可以直接在板端进行编译。本文采用板端编译的方式。

做好原有代码的备份,避免修改错误导致编译失败。

1、修改rknn_model_zoo/examples/yolov5/cpp/CMakeLists.txt,添加库的依赖,解决编译错误问题。

CMakeLists.txt

cmake_minimum_required(VERSION 3.10)

project(rknn_yolov5_demo)

if (ENABLE_ASAN)

message(STATUS "BUILD WITH ADDRESS SANITIZER")

set (CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} -fno-omit-frame-pointer -fsanitize=address")

set (CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} -fno-omit-frame-pointer -fsanitize=address")

set (CMAKE_LINKER_FLAGS_DEBUG "${CMAKE_LINKER_FLAGS_DEBUG} -fno-omit-frame-pointer -fsanitize=address")

endif ()

set(rknpu_yolov5_file rknpu2/yolov5.cc)

if (TARGET_SOC STREQUAL "rv1106" OR TARGET_SOC STREQUAL "rv1103")

add_definitions(-DRV1106_1103)

set(rknpu_yolov5_file rknpu2/yolov5_rv1106_1103.cc)

#dma

include_directories(${CMAKE_CURRENT_SOURCE_DIR}/../../../3rdparty/allocator/dma)

elseif(TARGET_SOC STREQUAL "rk1808" OR TARGET_SOC STREQUAL "rv1109" OR TARGET_SOC STREQUAL "rv1126")

add_definitions(-DRKNPU1)

set(rknpu_yolov5_file rknpu1/yolov5.cc)

endif()

add_subdirectory(${CMAKE_CURRENT_SOURCE_DIR}/../../../3rdparty/ 3rdparty.out)

add_subdirectory(${CMAKE_CURRENT_SOURCE_DIR}/../../../utils/ utils.out)

set(CMAKE_INSTALL_RPATH "$ORIGIN/lib")

file(GLOB SRCS ${CMAKE_CURRENT_SOURCE_DIR}/*.cc)

# Find GStreamer and its components

find_package(PkgConfig REQUIRED)

pkg_check_modules(GSTREAMER REQUIRED gstreamer-1.0)

pkg_check_modules(GSTREAMER_APP REQUIRED gstreamer-app-1.0)

# Include directories

include_directories(

${CMAKE_CURRENT_SOURCE_DIR}

${LIBRKNNRT_INCLUDES}

${GSTREAMER_INCLUDE_DIRS}

${GSTREAMER_APP_INCLUDE_DIRS}

)

# Add executable

add_executable(${PROJECT_NAME}

main.cc

postprocess.cc

${rknpu_yolov5_file}

)

# Link libraries

target_link_libraries(${PROJECT_NAME}

imageutils

fileutils

imagedrawing

${LIBRKNNRT}

${GSTREAMER_LIBRARIES}

${GSTREAMER_APP_LIBRARIES}

)

if (CMAKE_SYSTEM_NAME STREQUAL "Android")

target_link_libraries(${PROJECT_NAME}

log

)

endif()

if (CMAKE_SYSTEM_NAME STREQUAL "Linux")

set(THREADS_PREFER_PTHREAD_FLAG ON)

find_package(Threads REQUIRED)

target_link_libraries(${PROJECT_NAME} Threads::Threads)

endif()

# Install targets

install(TARGETS ${PROJECT_NAME} DESTINATION .)

install(FILES ${CMAKE_CURRENT_SOURCE_DIR}/../model/bus.jpg DESTINATION ./model)

install(FILES ${CMAKE_CURRENT_SOURCE_DIR}/../model/coco_80_labels_list.txt DESTINATION ./model)

file(GLOB RKNN_FILES "${CMAKE_CURRENT_SOURCE_DIR}/../model/*.rknn")

install(FILES ${RKNN_FILES} DESTINATION model)

2、修改rknn_model_zoo/examples/yolov5/cpp/main.cc,添加gstreamer采集usb摄像头数据,并通过yolov5模型进行实时目标检测。

main.cc

// Copyright (c) 2023 by Rockchip Electronics Co., Ltd. All Rights Reserved.

//

// Licensed under the Apache License, Version 2.0 (the "License");

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

/*-------------------------------------------

Includes

-------------------------------------------*/

#include <stdint.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <signal.h>

#include <gst/gst.h>

#include <linux/videodev2.h>

#include <fcntl.h>

#include <sys/ioctl.h>

#include <unistd.h>

#include <gst/app/app.h>

#include <sys/mman.h>

#include "yolov5.h"

#include "image_utils.h"

#include "file_utils.h"

#include "image_drawing.h"

#if defined(RV1106_1103)

#include "dma_alloc.hpp"

#endif

#define BUFFER_COUNT 4 // 通常使用3-4个缓冲区

struct buffer {

void *start;

size_t length;

};

static struct buffer *buffers = NULL;

static unsigned int n_buffers = 0;

static int init_camera(int fd, unsigned int width, unsigned int height)

{

struct v4l2_format fmt = {0};

struct v4l2_requestbuffers req = {0};

struct v4l2_buffer buf = {0};

// Set format

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

fmt.fmt.pix.width = width;

fmt.fmt.pix.height = height;

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_NV12;

fmt.fmt.pix.field = V4L2_FIELD_NONE;

if (ioctl(fd, VIDIOC_S_FMT, &fmt) < 0) {

printf("Failed to set format\n");

return -1;

}

// Request buffers

req.count = BUFFER_COUNT;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

if (ioctl(fd, VIDIOC_REQBUFS, &req) < 0) {

printf("Failed to request buffers\n");

return -1;

}

// Allocate buffers

buffers = (struct buffer*)calloc(req.count, sizeof(*buffers));

if (!buffers) {

printf("Failed to allocate buffers\n");

return -1;

}

// Map buffers

for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = n_buffers;

if (ioctl(fd, VIDIOC_QUERYBUF, &buf) < 0) {

printf("Failed to query buffer\n");

return -1;

}

buffers[n_buffers].length = buf.length;

buffers[n_buffers].start = mmap(NULL,

buf.length,

PROT_READ | PROT_WRITE,

MAP_SHARED,

fd,

buf.m.offset);

if (buffers[n_buffers].start == MAP_FAILED) {

printf("Failed to mmap buffer\n");

return -1;

}

// Queue buffer

if (ioctl(fd, VIDIOC_QBUF, &buf) < 0) {

printf("Failed to queue buffer\n");

return -1;

}

}

// Start streaming

enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (ioctl(fd, VIDIOC_STREAMON, &type) < 0) {

printf("Failed to start streaming\n");

return -1;

}

return 0;

}

static void uninit_camera(int fd)

{

enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

// Stop streaming

ioctl(fd, VIDIOC_STREAMOFF, &type);

// Unmap buffers

for (unsigned int i = 0; i < n_buffers; ++i) {

munmap(buffers[i].start, buffers[i].length);

}

free(buffers);

}

/*-------------------------------------------

Main Function

-------------------------------------------*/

// Global variables for control

static bool g_running = true;

// Signal handler

static void signal_handler(int signo) {

printf("\n Caught signal, exiting...\n");

g_running = false;

}

int main(int argc, char **argv)

{

int ret = 0;

GstElement *pipeline = NULL;

GstElement *appsink = NULL;

GstElement *appsrc = NULL;

rknn_app_context_t rknn_app_ctx;

if (argc != 4) {

printf("%s <model_path> <device_path> <rtsp_port>\n", argv[0]);

printf("Example: %s model.rknn /dev/video41 8554\n", argv[0]);

return -1;

}

const char *model_path = argv[1];

const char *device_path = argv[2];

const int rtsp_port = atoi(argv[3]);

// Initialize GStreamer

gst_init(&argc, &argv);

// Setup signal handler

signal(SIGINT, signal_handler);

// Initialize RTSP pipeline

char pipeline_str[1024];

snprintf(pipeline_str, sizeof(pipeline_str),

"v4l2src device=%s ! "

"videoconvert ! "

"video/x-raw,format=RGB,width=640,height=480 ! "

"tee name=t ! "

"queue ! appsink name=mysink sync=false max-buffers=1 drop=true "

"t. ! queue ! appsrc name=mysrc format=time is-live=true ! "

"videoconvert ! video/x-raw,format=NV12 ! "

"mpph264enc bps=2000000 ! h264parse ! "

"rtspclientsink location=rtsp://localhost:%d/test sync=false",

device_path, rtsp_port);

pipeline = gst_parse_launch(pipeline_str, NULL);

if (!pipeline) {

printf("Failed to create pipeline\n");

ret = -1;

} else {

// Get elements

appsink = gst_bin_get_by_name(GST_BIN(pipeline), "mysink");

appsrc = gst_bin_get_by_name(GST_BIN(pipeline), "mysrc");

if (!appsink || !appsrc) {

printf("Failed to get elements\n");

ret = -1;

} else {

// Configure appsrc

GstCaps *caps = gst_caps_new_simple("video/x-raw",

"format", G_TYPE_STRING, "RGB",

"width", G_TYPE_INT, 640,

"height", G_TYPE_INT, 480,

"framerate", GST_TYPE_FRACTION, 30, 1,

NULL);

g_object_set(G_OBJECT(appsrc),

"caps", caps,

"format", GST_FORMAT_TIME,

NULL);

gst_caps_unref(caps);

// Set pipeline state to playing

GstStateChangeReturn state_ret = gst_element_set_state(pipeline, GST_STATE_PLAYING);

if (state_ret == GST_STATE_CHANGE_FAILURE) {

printf("Failed to start pipeline\n");

ret = -1;

}

}

}

if (ret == 0) {

memset(&rknn_app_ctx, 0, sizeof(rknn_app_context_t));

init_post_process();

ret = init_yolov5_model(model_path, &rknn_app_ctx);

if (ret != 0) {

printf("init_yolov5_model fail! ret=%d\n", ret);

}

}

if (ret == 0) {

// Main processing loop

GstSample *sample = NULL;

struct timespec last_detect_time = {0, 0};

const int detect_interval_ms = 100; // 10fps for detection

// 预分配 640x640 的缓冲区

size_t model_input_size = 640 * 640 * 3;

unsigned char* model_input_buf = (unsigned char*)malloc(model_input_size);

while (g_running && model_input_buf) {

struct timespec current_time;

clock_gettime(CLOCK_MONOTONIC, ¤t_time);

long diff_ms = (current_time.tv_sec - last_detect_time.tv_sec) * 1000 +

(current_time.tv_nsec - last_detect_time.tv_nsec) / 1000000;

sample = gst_app_sink_pull_sample(GST_APP_SINK(appsink));

if (sample) {

GstBuffer *buffer = gst_sample_get_buffer(sample);

GstMapInfo map;

if (gst_buffer_map(buffer, &map, GST_MAP_READ)) {

// 只在间隔时间到达时执行目标检测

if (diff_ms >= detect_interval_ms) {

// Create image buffer for detection

image_buffer_t src_image;

memset(&src_image, 0, sizeof(image_buffer_t));

src_image.width = 640;

src_image.height = 480;

src_image.format = IMAGE_FORMAT_RGB888;

src_image.size = src_image.width * src_image.height * 3;

src_image.virt_addr = (unsigned char *)map.data;

// 创建目标图像缓冲区

image_buffer_t dst_image;

memset(&dst_image, 0, sizeof(image_buffer_t));

dst_image.width = 640;

dst_image.height = 640;

dst_image.format = IMAGE_FORMAT_RGB888;

dst_image.size = model_input_size;

dst_image.virt_addr = model_input_buf;

// 手动进行图像缩放和填充

// 1. 清空目标缓冲区

memset(dst_image.virt_addr, 114, dst_image.size); // 114 是 YOLOv5 默认的填充值

// 2. 复制并居中放置原始图像

int padding_top = (640 - 480) / 2;

for (int y = 0; y < 480; y++) {

memcpy(dst_image.virt_addr + ((y + padding_top) * 640 * 3),

src_image.virt_addr + (y * 640 * 3),

640 * 3);

}

// 3. 执行目标检测

object_detect_result_list od_results;

memset(&od_results, 0, sizeof(object_detect_result_list));

printf("Processing image: src=%dx%d dst=%dx%d\n",

src_image.width, src_image.height,

dst_image.width, dst_image.height);

ret = inference_yolov5_model(&rknn_app_ctx, &dst_image, &od_results);

if (ret == 0) {

printf("Detected %d objects\n", od_results.count);

// 创建一个新的图像缓冲区用于绘制结果

image_buffer_t display_image;

memset(&display_image, 0, sizeof(image_buffer_t));

display_image.width = src_image.width;

display_image.height = src_image.height;

display_image.format = IMAGE_FORMAT_RGB888;

display_image.size = src_image.size;

display_image.virt_addr = (unsigned char*)malloc(src_image.size);

if (display_image.virt_addr) {

// 复制原始图像

memcpy(display_image.virt_addr, src_image.virt_addr, src_image.size);

// 调整检测框坐标并绘制

for (int i = 0; i < od_results.count; i++) {

object_detect_result *det_result = &(od_results.results[i]);

// 将检测框坐标从640x640映射回640x480

float scale_y = 480.0f / 640.0f;

float box_left = det_result->box.left;

float box_top = (det_result->box.top - padding_top) * scale_y;

float box_right = det_result->box.right;

float box_bottom = (det_result->box.bottom - padding_top) * scale_y;

// 确保坐标在有效范围内

box_left = std::max(0.0f, std::min(box_left, 639.0f));

box_top = std::max(0.0f, std::min(box_top, 479.0f));

box_right = std::max(0.0f, std::min(box_right, 639.0f));

box_bottom = std::max(0.0f, std::min(box_bottom, 479.0f));

// 打印调试信息

printf("Object %d: %s (%.1f%%) box=[%.1f, %.1f, %.1f, %.1f]\n",

i,

coco_cls_to_name(det_result->cls_id),

det_result->prop * 100,

box_left, box_top, box_right, box_bottom);

// 只有当坐标有效时才绘制

if (box_right > box_left && box_bottom > box_top) {

// 使用项目自带的绘制函数

draw_rectangle(&display_image,

(int)box_left,

(int)box_top,

(int)(box_right - box_left),

(int)(box_bottom - box_top),

COLOR_RED, 3); // 增加线条宽度到 3

// 绘制标签背景

int text_padding = 4;

char text[256];

snprintf(text, sizeof(text), "%s %.1f%%",

coco_cls_to_name(det_result->cls_id),

det_result->prop * 100);

// 计算文本位置,确保在图像范围内

int text_y = (int)box_top - 30; // 增加与边框的距离

text_y = std::max(text_y, text_padding); // 确保不超出上边界

// 绘制黑色背景框

draw_rectangle(&display_image,

(int)box_left - text_padding,

text_y - text_padding,

strlen(text) * 15, // 估算文本宽度

30, // 增加文本高度

COLOR_BLACK,

-1); // -1 表示填充矩形

// 绘制文本,增大字号

draw_text(&display_image,

text,

(int)box_left,

text_y,

COLOR_WHITE, // 改为白色文本

12); // 增大字号到 4

}

}

// 创建新的 GStreamer 缓冲区并推送到 appsrc

GstBuffer *out_buffer = gst_buffer_new_allocate(NULL, src_image.size, NULL);

if (out_buffer) {

GstMapInfo out_map;

if (gst_buffer_map(out_buffer, &out_map, GST_MAP_WRITE)) {

// 复制带有检测框的图像

memcpy(out_map.data, display_image.virt_addr, src_image.size);

gst_buffer_unmap(out_buffer, &out_map);

// 设置时间戳

GST_BUFFER_PTS(out_buffer) = GST_BUFFER_PTS(buffer);

GST_BUFFER_DURATION(out_buffer) = GST_BUFFER_DURATION(buffer);

GST_BUFFER_DTS(out_buffer) = GST_CLOCK_TIME_NONE;

// 推送到 appsrc

GstFlowReturn flow_ret = gst_app_src_push_buffer(GST_APP_SRC(appsrc), out_buffer);

if (flow_ret != GST_FLOW_OK) {

printf("Failed to push buffer to appsrc: %d\n", flow_ret);

}

} else {

gst_buffer_unref(out_buffer);

}

}

// 释放显示缓冲区

free(display_image.virt_addr);

}

}

last_detect_time = current_time;

}

gst_buffer_unmap(buffer, &map);

}

gst_sample_unref(sample);

}

usleep(5000); // 5ms

}

// 清理预分配的缓冲区

if (model_input_buf) {

free(model_input_buf);

}

}

// Cleanup

deinit_post_process();

release_yolov5_model(&rknn_app_ctx);

if (appsrc) {

gst_object_unref(appsrc);

}

if (appsink) {

gst_object_unref(appsink);

}

if (pipeline) {

gst_element_set_state(pipeline, GST_STATE_NULL);

gst_object_unref(pipeline);

}

return ret;

}

板端编译

进入rknn_model_zoo目录后,执行如下命令进行编译:

./build-linux.sh -t rk3588 -a aarch64 -d yolov5

编译完成后,在rknn_model_zoo/install/rk3588_linux_aarch64/rknn_yolov5_demo目录下会生成rknn_yolov5_demo可执行文件。

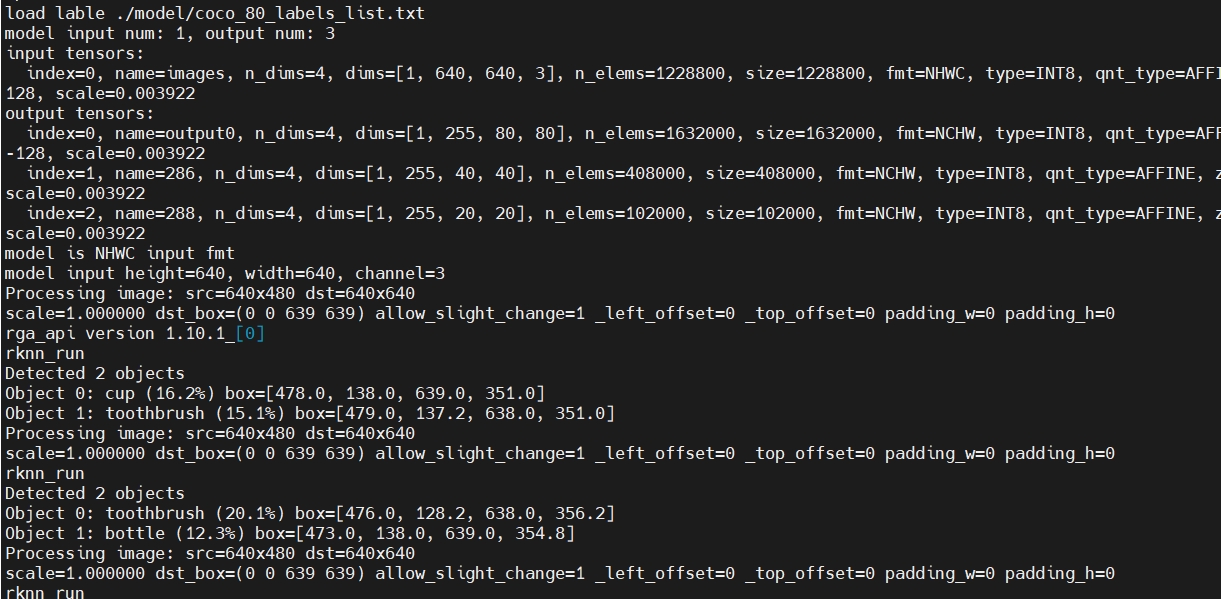

板端运行

在确保rknn模型放到板端,且摄像头设备正常连接的情况下,执行命令运行mediamtx:

./mediamtx

在mediamtx运行后,执行如下命令运行rknn_yolov5_demo:

./rknn_yolov5_demo model/yolov5s.rknn /dev/video41 8554

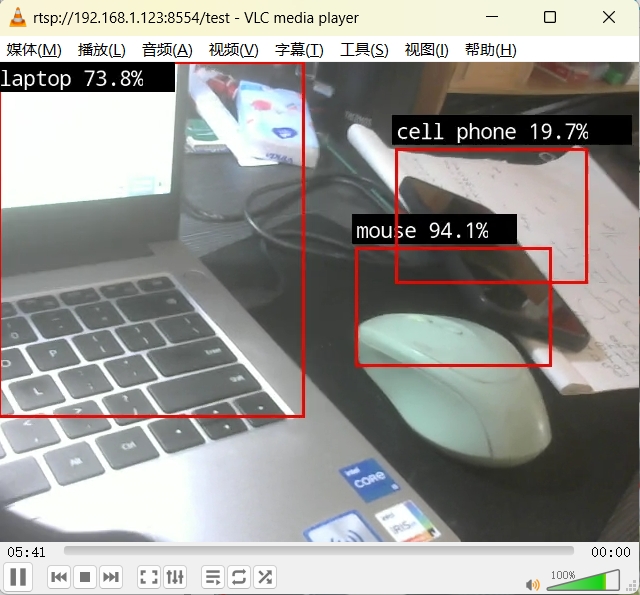

通过vlc播放器,输入rtsp://localhost:8554/test,即可看到摄像头画面。

- 如果摄像头没有画面,请检查摄像头是否正常连接。

- 如果画面显示不正常,请检测数据格式是否正确。

- 如果想用其他模型,请修改rknn_yolov5_demo中的模型路径、名称

- 本项目代码仅供参考,如果需要更复杂或稳定的目标检测,请自行修改代码。